Artificial intelligence is transforming industries across the globe. Although AI systems drive efficiency and innovation, they also raise ethical concerns, transparency issues, and security risks. To keep pace with regulatory demands and stakeholder expectations, organisations should adopt robust AI governance policies.

As regulation tightens and security expectations grow, firms must take the initiative in addressing AI risks, particularly around data safety and accountability. This is where ISO/IEC 42001 helps: it offers a structured foundation for AI governance that supports the adoption of internationally recognised best practices.

The Government of Canada publishes a detailed Implementation guide for managers of Artificial intelligence systems: https://ised-isde.canada.ca/site/ised/en/implementation-guide-managers-artificial-intelligence-systems

What is ISO/IEC 42001?

ISO/IEC 42001 is an international standard that specifies requirements for establishing, implementing, maintaining, and continually improving an artificial intelligence management system (AIMS). It lays the groundwork for AI governance and regulatory alignment, helping organisations build trustworthy management systems that support successful AI adoption and broader digital transformation.

The standard covers risk management, AI system impact assessment, system lifecycle management, and oversight of third parties. Since its publication in December 2023, it has become a useful reference for building responsible AI systems. As regulations have evolved, ISO/IEC 42001 has served as a pillar that helps organisations balance innovation with compliance while meeting their legal and social responsibilities.

In short, ISO/IEC 42001 provides a global standard for the secure and ethical management of AI systems. Besides helping businesses close security gaps and stay transparent, it facilitates compliance with emerging AI regulations, including the EU AI Act and national AI governance laws.

For organisations that operate in fast-evolving technological environments, face strict legal requirements, and must meet rising stakeholder expectations, the standard offers an effective way to structure and assess their AI security and governance controls.

Core Components of ISO/IEC 42001

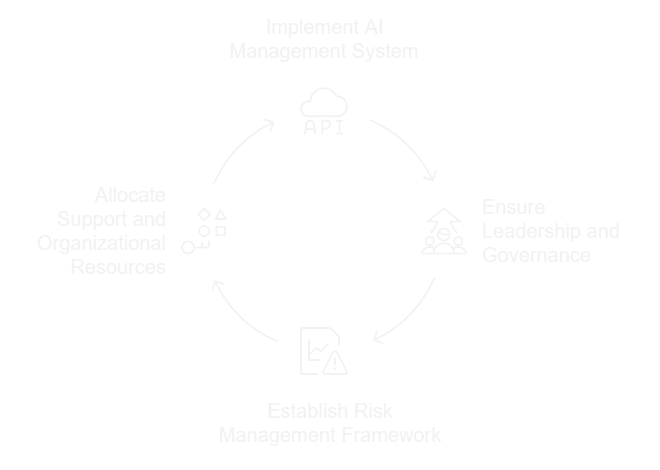

The core components of ISO/IEC 42001 address the technical operation and regulatory complexity of advanced AI deployment.

AI Management System

The artificial intelligence management system defined by ISO/IEC 42001 is a structured framework of methods, policies, and strategies for controlling AI. It is built on the Plan-Do-Check-Act (PDCA) model used across ISO management system standards, which calls for explicit, documented processes and measurable KPIs to ensure ethical and reliable AI operations.

Controls to incorporate into an AI management system include structured processes for validating datasets, tracking and reviewing successive AI model versions, documenting algorithms, monitoring drift, managing incidents, and defining criteria for model retirement. Under the PDCA cycle, the management system governs decisions across the AI lifecycle, from hypothesis design to training parameters.
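The versioning, documentation, and retirement controls described above can be sketched as a small model registry. This is a minimal illustration under assumed names (`ModelRegistry`, `ModelVersion`, and `retire_after` are hypothetical and not defined by ISO/IEC 42001): each version records a SHA-256 fingerprint of its training data, its hyperparameters, and a retirement date that a PDCA "Check" review could act on.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ModelVersion:
    """One registered model version plus the evidence that ties it to its data."""
    name: str
    version: int
    dataset_sha256: str    # fingerprint of the exact training data used
    training_params: dict  # hyperparameters recorded for reproducibility
    retire_after: date     # retirement criterion: review or retire after this date


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a real AIMS would persist these records."""
    versions: dict = field(default_factory=dict)  # model name -> list of ModelVersion

    def register(self, name, dataset_bytes, training_params, retire_after):
        history = self.versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(history) + 1,  # versions are numbered sequentially per model
            dataset_sha256=hashlib.sha256(dataset_bytes).hexdigest(),
            training_params=dict(training_params),
            retire_after=retire_after,
        )
        history.append(entry)
        return entry

    def latest(self, name):
        return self.versions[name][-1]

    def due_for_retirement(self, today):
        """All versions whose retirement date has passed (input to the Check phase)."""
        return [v for history in self.versions.values() for v in history
                if today > v.retire_after]


registry = ModelRegistry()
registry.register("credit-scorer", b"income,score\n52000,1\n", {"lr": 0.01}, date(2025, 6, 30))
registry.register("credit-scorer", b"income,score\n48000,0\n", {"lr": 0.005}, date(2026, 6, 30))
print(registry.latest("credit-scorer").version)  # 2
print([v.version for v in registry.due_for_retirement(date(2025, 12, 1))])  # [1]
```

Hashing the dataset rather than storing a file path means a later audit can detect whether a model was trained on data other than the data documented for it.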

Leadership and Governance

Clause 5 of ISO/IEC 42001 requires top management to demonstrate leadership and commitment towards AI governance. This includes establishing AI policies that align with the organisational strategy and assigning roles and responsibilities so that teams are accountable. These roles must provide technical oversight of areas such as model explainability, transparency, and the enforcement of controls.

Risk Management Framework

The risk management framework required by ISO/IEC 42001 includes AI system impact assessments that analyse potential risks, identify security vulnerabilities, and evaluate overall system security. Adversarial threat modelling can cover threats such as model extraction and evasion attacks, while human-in-the-loop review helps ensure that risk decisions are safe and appropriate.
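A common way to prioritise the risks identified in an impact assessment is a likelihood × impact score. ISO/IEC 42001 does not prescribe a scoring scheme, so the 1 to 5 scales, the treatment bands, and the example risks below are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    risk_id: str
    description: str
    likelihood: int  # 1 (rare) .. 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) .. 5 (severe), assumed scale

    def score(self) -> int:
        if not (1 <= self.likelihood <= 5 and 1 <= self.impact <= 5):
            raise ValueError(f"{self.risk_id}: likelihood and impact must be 1-5")
        return self.likelihood * self.impact


def treatment(score: int) -> str:
    """Map a score to an assumed treatment band (thresholds are illustrative)."""
    if score >= 15:
        return "treat before deployment"
    if score >= 8:
        return "treat with monitoring"
    return "accept and document"


def prioritise(risks):
    """Return (risk_id, score, treatment) tuples, highest score first."""
    rows = [(r.risk_id, r.score(), treatment(r.score())) for r in risks]
    return sorted(rows, key=lambda row: row[1], reverse=True)


risk_register = [
    AIRisk("R1", "Model extraction through a public prediction API", 3, 4),
    AIRisk("R2", "Evasion with adversarially crafted inputs", 4, 4),
    AIRisk("R3", "Outdated model documentation", 2, 2),
]
for row in prioritise(risk_register):
    print(row)
```

Here the evasion risk (score 16) lands in the "treat before deployment" band, while the documentation risk (score 4) is accepted and recorded.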

Organisations typically implement these risk controls through real-time monitoring dashboards, threshold-based alerts, and rollback mechanisms that restore the previous model version in case of unexpected failure or drift.
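The threshold-and-rollback pattern can be sketched in a few lines. The `Deployment` class, the metric names, and the threshold values are hypothetical; a production system would read metrics from its monitoring stack and perform the rollback through its deployment tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Tracks the serving model version and the versions it replaced."""
    active: str = ""
    history: list = field(default_factory=list)  # earlier versions, oldest first

    def deploy(self, version: str) -> None:
        if self.active:
            self.history.append(self.active)
        self.active = version

    def rollback(self) -> str:
        if not self.history:
            raise RuntimeError("no previous version to roll back to")
        self.active = self.history.pop()
        return self.active


def check_and_rollback(deployment, metrics, thresholds):
    """Roll back to the previous version if any metric exceeds its threshold.

    metrics and thresholds map a metric name (e.g. error rate, drift score) to a value.
    Metrics missing from the telemetry are treated as within limits in this sketch.
    Returns the names of the breached metrics.
    """
    breaches = [name for name, limit in thresholds.items()
                if metrics.get(name, 0.0) > limit]
    if breaches:
        deployment.rollback()
    return breaches


dep = Deployment()
dep.deploy("v1")
dep.deploy("v2")
breached = check_and_rollback(dep, {"error_rate": 0.09, "drift_score": 0.05},
                              {"error_rate": 0.05, "drift_score": 0.20})
print(breached, dep.active)  # ['error_rate'] v1
```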

Support and Organizational Resources

Implementing ISO/IEC 42001 requires the organisation to maintain technical competence in AI engineering, MLOps, cybersecurity, data governance, and AI model interpretability. Training programmes for teams can cover bias detection frameworks, explainability tools such as SHAP and LIME, model optimisation, and secure ML pipelines.
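As a small example of what bias detection training might cover, the sketch below computes the demographic parity difference: the largest gap in positive-prediction rate between groups. The metric choice, the tolerance of 0.1, and the sample data are assumptions; a real programme would select fairness metrics suited to the use case.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups; 0.0 means parity."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


TOLERANCE = 0.1  # assumed acceptance threshold, set by the organisation

preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, groups)
print(gap, "FAIL" if gap > TOLERANCE else "PASS")  # 0.5 FAIL
```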

The organisation must also provide the necessary resources, such as GPU/TPU compute environments, protected cloud resources, and encrypted data storage. Data governance protocols help establish control over datasets, anonymisation, retention, and data quality metrics. In addition, organisations should maintain documented information repositories for all models, datasets, scripts, system logs, and configuration settings.
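Retention and de-identification rules of this kind can be expressed directly in a data pipeline. The sketch below uses keyed HMAC-SHA256 pseudonymisation, which is weaker than full anonymisation because whoever holds the key can re-link tokens; the field names, the 365-day retention period, and the key are all hypothetical.

```python
import hashlib
import hmac
from datetime import date, timedelta


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token.

    Equal inputs give equal tokens, so records stay joinable, but the original
    value cannot practically be recovered without the secret key.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def expired(collected_on: date, retention_days: int, today: date) -> bool:
    """True once a record has been held longer than its retention period."""
    return today - collected_on > timedelta(days=retention_days)


def apply_policy(records, secret_key, retention_days, today):
    """Drop expired records and pseudonymise the 'customer_id' of the rest."""
    kept = []
    for rec in records:
        if expired(rec["collected_on"], retention_days, today):
            continue  # past retention: drop rather than keep
        clean = dict(rec)  # leave the caller's record untouched
        clean["customer_id"] = pseudonymise(rec["customer_id"], secret_key)
        kept.append(clean)
    return kept


KEY = b"example-key-keep-in-a-secrets-manager"  # hypothetical key
records = [
    {"customer_id": "C-1001", "collected_on": date(2023, 1, 10), "income": 52000},
    {"customer_id": "C-1002", "collected_on": date(2025, 3, 5), "income": 48000},
]
cleaned = apply_policy(records, KEY, retention_days=365, today=date(2025, 6, 1))
print(len(cleaned), cleaned[0]["customer_id"][:12])
```

The first record is older than the assumed retention period and is dropped; the second is kept with its identifier replaced by a token.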

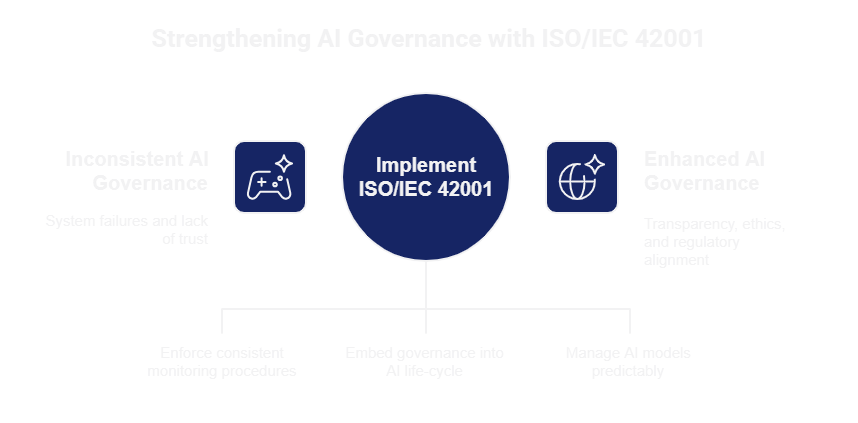

How ISO/IEC 42001 Strengthens AI Governance Across Industries

Implementing ISO/IEC 42001 across critical sectors, including healthcare, manufacturing, and public services, enables organisations to enforce consistent monitoring, reduce system-level failures, and build trust in the AI embedded in their operations. Its lifecycle-driven approach embeds governance into data security, model training procedures, security monitoring, and post-deployment monitoring. Its accountability framework helps organisations manage AI models with predictable behaviour, technical transparency, and regulatory conformity.

Enhances Governance Transparency

ISO/IEC 42001 reinforces transparency by requiring organisations to document how their AI systems work, for example through datasheets, model cards, and traceable algorithmic logs. This ensures that every element of the AI lifecycle, from data sourcing to implementation logic, can be traced, audited, and validated.

With an AI management system embedded in corporate governance, you can maintain a complete audit trail covering data preprocessing operations, feature engineering steps, model training parameters, and hyperparameter optimisation logs. Explainability frameworks such as SHAP and LIME help explain individual model predictions, which in turn can reveal hidden risks. Version control repositories record model iterations, dataset changes, and drift-monitoring configurations. For industries with compliance obligations, such as medical diagnostics, financial risk scoring, or automated screening, these transparency artefacts help maintain defensible AI practices.
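A complete audit trail is more useful when it is tamper-evident. One common design, assumed here and not prescribed by ISO/IEC 42001, is a hash chain. In the sketch below (the `AuditLog` class and event fields are hypothetical), each entry stores the SHA-256 hash of its predecessor, so editing or reordering entries, or deleting any entry other than the last, makes verification fail.

```python
import copy
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, event: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the event."""
    payload = prev_hash + json.dumps(event, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log; each entry commits to the one before it (a hash chain)."""

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        event = copy.deepcopy(event)  # snapshot, so later edits by the caller are not logged
        h = entry_hash(prev, event)
        self.entries.append({"event": event, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute the chain and compare it with the stored hashes."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"step": "preprocessing", "dataset": "loans-2024", "rows_dropped": 112})
log.append({"step": "training", "model": "credit-scorer", "learning_rate": 0.01})
print(log.verify())  # True
log.entries[1]["event"]["learning_rate"] = 0.05  # simulated tampering
print(log.verify())  # False
```

Truncating the tail of the log is not detected by the chain alone, which is why such logs are often anchored by periodically storing the latest hash somewhere separate.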

Embeds Ethics and Accountability

ISO/IEC 42001 calls for a formal AI governance structure, which can include ethics committees, a model validation board, and a responsible AI leadership role. The standard expects organisations to build fairness, safety, and human oversight directly into the technical design of their AI systems. Technical measures that embed ethics include fairness controls, human-in-the-loop review, responsible dataset governance, and ethical risk scoring integrated into the AI system impact assessment. Accountability is made possible through role-based access control, documented responsibilities, and audit logs, which together prevent uncontrolled model deployments and support ethically aligned outcomes.
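Role-based access control combined with an approval step can be sketched as a deployment gate. The role names, the permission table, and the `DeploymentGate` class are hypothetical; the sketch shows only the idea that a model ships after an independent validator approves it, and that every permission check is recorded.

```python
# Hypothetical role model: the actions each role may perform.
PERMISSIONS = {
    "data_scientist": {"train", "evaluate"},
    "validator": {"evaluate", "approve"},
    "release_manager": {"deploy"},
}


class DeploymentGate:
    """Blocks deployments that lack permission or an independent approval."""

    def __init__(self):
        self.approvals = {}  # model version -> user who approved it
        self.audit = []      # (user, action, version, permitted) for every check

    def _authorise(self, user, role, action, version):
        permitted = action in PERMISSIONS.get(role, set())
        self.audit.append((user, action, version, permitted))
        if not permitted:
            raise PermissionError(f"{user} ({role}) may not {action} {version}")

    def approve(self, user, role, version):
        self._authorise(user, role, "approve", version)
        self.approvals[version] = user

    def deploy(self, user, role, version):
        self._authorise(user, role, "deploy", version)
        if version not in self.approvals:
            raise PermissionError(f"{version} has not been approved by a validator")
        if self.approvals[version] == user:
            # segregation of duties, in case one person holds both roles
            raise PermissionError("approver and deployer must be different people")
        return f"{version} deployed by {user}"


gate = DeploymentGate()
gate.approve("alice", "validator", "credit-scorer:v3")
print(gate.deploy("bob", "release_manager", "credit-scorer:v3"))
try:
    gate.deploy("carol", "data_scientist", "credit-scorer:v4")
except PermissionError as err:
    print(err)  # carol (data_scientist) may not deploy credit-scorer:v4
```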

Reduces AI Governance Failures

AI systems can fail because of algorithmic bias, model hallucination, operational disruption, and adversarial exploitation, failures that are often rooted in the absence of structured oversight. Technical safeguards that address these failures include pre-deployment risk assessments, adversarial robustness testing, and continuous monitoring with statistical drift detection methods such as Kullback-Leibler (KL) divergence and Kolmogorov-Smirnov (KS) tests. With these controls in place, ISO/IEC 42001 helps reduce the frequency and impact of AI-driven operational failures across industries.
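The two drift statistics mentioned above can be computed without external libraries. This sketch assumes drift is measured on a single feature: KL divergence compares binned histograms of live data against a baseline window, and the two-sample KS statistic compares raw samples. Any alert thresholds (for example, KL above 0.1) would be organisational choices, not values from the standard.

```python
import math
from bisect import bisect_right


def kl_divergence(p, q, eps=1e-12):
    """KL(P || Q) in nats for two histograms over the same bins.

    p is the live histogram, q the baseline; counts are normalised first.
    Empty baseline bins are clamped to eps, so unseen values give a large value
    instead of infinity.
    """
    total_p, total_q = sum(p), sum(q)
    kl = 0.0
    for pi, qi in zip(p, q):
        pi, qi = pi / total_p, qi / total_q
        if pi > 0:
            kl += pi * math.log(pi / max(qi, eps))
    return kl


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    for x in a + b:  # the ECDF gap can only change at observed values
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


baseline_hist = [50, 50]  # counts per bin in the reference window
live_hist = [90, 10]      # counts per bin in the current window
print(round(kl_divergence(live_hist, baseline_hist), 3))  # 0.368
print(ks_statistic([1, 2, 3, 4], [3, 4, 5, 6]))  # 0.5
```

For p-values rather than raw statistics, `scipy.stats.ks_2samp` is a common choice; the hand-written version above only shows what the statistic measures.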

Enables Regulatory Alignment

Because ISO/IEC 42001 maps well onto global regulations such as the EU AI Act, GDPR, and HIPAA, as well as financial compliance rules and sector-specific safety standards, it helps organisations demonstrate technical control, documentation, and accountability for their AI systems. The standard can serve as a common compliance baseline that covers many of the technical requirements regulators are likely to expect, and it helps align documentation with legal audit requirements. Organisations can map their internal risk classification onto regulatory risk tiers and apply data governance controls that support data minimisation and privacy compliance. In this way, the standard supports compliance and formalises AI lifecycle governance in highly regulated sectors, including banking, insurance, aviation, and healthcare.
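Mapping internal risk classification to regulatory tiers can start as a simple lookup. The sketch below uses the four risk levels commonly associated with the EU AI Act (prohibited, high, limited, minimal), but the use-case tags, their assignments, and the control lists are simplified, hypothetical examples. Actual classification must follow the text of the Act (for example, Article 5 and Annex III).

```python
from enum import Enum


class RegulatoryTier(Enum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    PROHIBITED = 4


# Simplified, hypothetical mapping from internal use-case tags to EU AI Act tiers.
USE_CASE_TIERS = {
    "social_scoring": RegulatoryTier.PROHIBITED,
    "credit_scoring": RegulatoryTier.HIGH,
    "recruitment_screening": RegulatoryTier.HIGH,
    "customer_chatbot": RegulatoryTier.LIMITED,
    "spam_filter": RegulatoryTier.MINIMAL,
}

# Assumed internal controls per tier; stricter tiers include the lighter ones.
CONTROLS = {
    RegulatoryTier.MINIMAL: ["AI inventory entry"],
    RegulatoryTier.LIMITED: ["AI inventory entry", "user transparency notice"],
    RegulatoryTier.HIGH: ["AI inventory entry", "user transparency notice",
                          "impact assessment", "human oversight",
                          "technical documentation"],
}


def classify(use_case_tags):
    """Return the strictest tier triggered by any tag of an AI system."""
    if not use_case_tags:
        raise ValueError("at least one use-case tag is required")
    unknown = [t for t in use_case_tags if t not in USE_CASE_TIERS]
    if unknown:
        raise KeyError(f"unmapped use cases need manual review: {unknown}")
    return max((USE_CASE_TIERS[t] for t in use_case_tags), key=lambda tier: tier.value)


def required_controls(use_case_tags):
    tier = classify(use_case_tags)
    if tier is RegulatoryTier.PROHIBITED:
        raise ValueError("prohibited use case: the system must not be deployed")
    return tier, CONTROLS[tier]


tier, controls = required_controls(["credit_scoring", "customer_chatbot"])
print(tier.name, controls)
```

Taking the strictest tier across all tags reflects the idea that a system serving several purposes inherits the obligations of its riskiest one; unknown tags fail loudly instead of defaulting to a low tier.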

Ensures Sustainable Operational Control

ISO/IEC 42001 establishes operational governance across the AI lifecycle, supported by MLOps integration and a continuous risk assessment strategy. This helps AI systems remain stable, legally compliant, and operational even as data, consumer behaviour, and external conditions evolve. Sustained control is achieved through continuous performance evaluation, real-time dashboards, and telemetry, which in turn drive automated retraining pipelines, model retirement criteria, resource governance, and change management workflows.
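Retraining and retirement criteria can be written down as an explicit, testable policy. In this sketch the function name and every threshold (a 0.03 drop triggers retraining, a 0.10 drop or an exhausted retraining budget triggers retirement) are assumptions an organisation would replace with its own documented criteria.

```python
from statistics import mean


def lifecycle_action(recent_scores, baseline, retrains_used=0,
                     retrain_drop=0.03, retire_drop=0.10, max_retrains=3):
    """Decide the next change-management step for a deployed model.

    recent_scores: recent evaluation scores from telemetry (e.g. weekly accuracy).
    baseline: the score accepted when the model was released.
    All thresholds are assumed values an organisation would set in its own policy.
    """
    drop = baseline - mean(recent_scores)
    if drop >= retire_drop:
        return "retire"  # degradation too large to fix by retraining alone
    if drop >= retrain_drop:
        # retraining budget exhausted -> retire instead of retraining again
        return "retire" if retrains_used >= max_retrains else "retrain"
    return "keep"


print(lifecycle_action([0.89, 0.90, 0.88], baseline=0.90))  # keep
print(lifecycle_action([0.85, 0.86], baseline=0.90))  # retrain
print(lifecycle_action([0.85, 0.86], baseline=0.90, retrains_used=3))  # retire
print(lifecycle_action([0.78, 0.80], baseline=0.90))  # retire
```

Encoding the policy as a function makes it easy to version alongside the model and to review during change management.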

Conclusion

ISO/IEC 42001 offers a rigorous, lifecycle-driven framework that enables organisations to govern AI systems while maintaining transparency, security, and ethical responsibility. Its systematic risk management, ongoing monitoring, and rigorous reporting support consistent compliance and keep AI initiatives aligned with organisational objectives. As companies scale AI in key operations, implementing ISO/IEC 42001 is becoming vital for building trust and avoiding governance failures. Matayo provides ISO/IEC 42001 certification for companies that need innovative, legally compliant AI solutions, helping them implement AI responsibly.